How to Detect and Block Bot Traffic on Your Website

By Prosanjit Dhar

November 6, 2024

Last Modified: December 12, 2024

Maybe bot traffic has already had you breaking out in a cold sweat. No surprise there!

These days, it is one of the most frequently encountered issues on the web.

And without proper protection, you really can’t escape it. Bots will keep coming to your site, skewing your analytics data, increasing server load, and even exposing security vulnerabilities.

All of these can become a real headache for you. But don’t panic!

In this blog, we’ll explore bot traffic, how to identify it, and, above all, what practical solutions are available for your WordPress site.

What is bot traffic?

You may have noticed that not all traffic to your website is real or human. Bot traffic refers to the non-human traffic generated by automated scripts that continuously visit your website.

These bots often get a bad name because many of them engage in spammy activities, crawling your site and doing things you don’t want.

However, not all bots are harmful. Some are legitimate and help enhance website functionality by performing and monitoring tasks.

Therefore, you need to understand their types and behaviors to identify and block harmful bot traffic accurately.

Different types of bot traffic

Because bots behave in so many different ways, it’s quite difficult to tell them apart. To clear up the confusion, we’ve grouped them into two lists by type.

These lists will help you identify which bots are good and which are spammy or malicious.

Good bot traffic

| Bot | What it does |
| --- | --- |
| Googlebot | Google’s primary crawler, used to index web pages for Google search results. |
| Bingbot | Search engine crawler responsible for indexing pages for Bing search. |
| Yahoo Slurp | Yahoo’s web crawler, which gathers information for Yahoo search results. |
| DuckDuckBot | Crawler of the privacy-focused search engine DuckDuckGo, which indexes pages without tracking users. |
| Baidu Spider | Crawler used by Baidu, China’s largest search engine, to index content for Chinese-language searches. |
| Yandex Bot | Yandex’s main crawler, which gathers content for its search index. |
| Facebook External Hit | Used by Facebook to gather data from web pages when links are shared on its platform. |
| Twitterbot | Fetches web page information to display rich previews when links are shared in tweets. |
| LinkedIn Bot | Fetches data to display previews when links are shared on LinkedIn. |
| Alexa Crawler | Was used by Alexa to gather data on web traffic for ranking websites (the Alexa ranking service was retired in 2022). |
| Pinterest Bot | Indexes images and links on websites to make them accessible on the Pinterest platform. |
| Feedfetcher | Google’s feed crawler, which fetches and updates RSS feed content from websites. |
| Ahrefs Bot | Crawls websites to gather data for backlink analysis, keyword research, and SEO insights. |
| SEMrush Bot | Collects data on keyword rankings, performs site audits, and supports competitive analysis for SEO. |

Bad bot traffic

| Bot | What it does |
| --- | --- |
| Scraper Bots | Scrape content from websites without permission, often for unauthorized content reuse or data gathering. |
| Spambot | Generates spam content or comments on websites, filling forms or comment sections with unwanted ads and links. |
| DDoS Bots | Flood a web property with requests in a malicious attempt to disrupt normal traffic, causing websites to become slow or crash. |
| Credential Stuffing Bots | Attempt to access accounts using lists of stolen usernames and passwords, leading to potential unauthorized access. |
| Spam Crawlers | Collect email addresses and contact details, often for spamming purposes. |
| Malware Bots | Spread malware by exploiting website vulnerabilities, infecting sites and potentially visitors’ devices. |
| Ad Fraud Bots | Generate fake ad clicks and impressions to defraud advertisers and manipulate advertising metrics. |
| Phishing Bots | Automate phishing attacks, tricking users into providing sensitive information like passwords and credit card details. |
| Botnets | Networks of compromised computers (often infected with malware) that are controlled to perform various malicious activities in unison. |
| Fake Account Bots | Create fake accounts on social media, forums, and websites to spread spam or misinformation, or to carry out other harmful activities. |
| DotBot | Aggressively crawls websites, often overwhelming servers and slowing down site performance. |
| Scrapy | An open-source web scraping framework; crawlers built with it are often run without permission, disregarding site rules to gather content. |
| Xovibot | Known for scraping web content without consent, often blocked for its persistent and aggressive behavior. |
| Yandex Images Bot | Sometimes scrapes website images without respecting robots.txt, taking content without authorization. |
| Click Bots | Artificially generate ad clicks to manipulate PPC (pay-per-click) metrics, often leading to wasted ad budgets. |
| Skewed Analytics Bots | Create fake traffic to distort website analytics, making it difficult to analyze genuine visitor behavior. |
| Price Scraping Bots | Extract pricing data from e-commerce sites, often used by competitors or unauthorized resellers. |

So, what do we get from these lists? A first impression: a good bot performs helpful tasks that enhance, rather than disrupt, the online experience.

Whereas a “bad” bot does just the opposite! It often carries malicious or even illegal intentions like stealing private information or disturbing your real traffic.

That’s why, to distinguish bots from your website’s usual traffic, you need to understand the behaviors they typically share while interacting with a website.
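As a first rough filter, the bot names from the lists above can be matched against the User-Agent header of each request. The sketch below is only an illustration: the token lists are assumptions based on how these crawlers commonly identify themselves, and the function name `classify_user_agent` is hypothetical. Keep in mind that a User-Agent string can be faked, so treat the result as a hint rather than proof.

```python
# Lowercase substrings that commonly appear in these crawlers' User-Agent
# headers (assumed values for illustration; extend or adjust for your site).
GOOD_BOT_TOKENS = (
    "googlebot", "bingbot", "slurp", "duckduckbot", "baiduspider",
    "yandexbot", "facebookexternalhit", "twitterbot", "linkedinbot",
    "ahrefsbot", "semrushbot",
)
BAD_BOT_TOKENS = ("dotbot", "scrapy", "xovibot")


def classify_user_agent(user_agent):
    """Return 'good', 'bad', or 'unknown' for a User-Agent string."""
    ua = user_agent.lower()
    # Check the block list first so a blocked name always wins.
    if any(token in ua for token in BAD_BOT_TOKENS):
        return "bad"
    if any(token in ua for token in GOOD_BOT_TOKENS):
        return "good"
    return "unknown"
```

Anything that comes back as "unknown" is either a regular browser or a bot that doesn’t announce itself, which is where the behavior patterns in the next section come in.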

The common behaviors of bot traffic

Bot traffic behaves differently from human traffic. By watching for the following patterns in your analytics, you can tell the two apart.

- High page views: If you notice an unusual spike in your page views within a short period (thousands from the same user), then it’s a red flag. Real users typically don’t navigate that quickly.

- High bounce rate: Bots don’t engage with your content like real users do. They visit a page and leave immediately. This results in a high bounce rate in your analytics.

- Short session durations: Real visitors typically take their time exploring your site. But if you notice users popping in and out in the blink of an eye (within milliseconds), that’s a strong sign you might be dealing with bots.

- Traffic spikes from unknown locations: If you suddenly see a lot of visitors from countries you don’t typically reach, that could be bots trying to access your site from random places.

- Junk conversions: Sometimes, bots fill out forms or sign up for things without any real intent. These “conversions” don’t translate to actual interest in your product or service, which can clutter your data.

- Spammy tendencies: Look out for repetitive comments or messages that seem too generic or unrelated to your content. Bots often leave spammy trails. That makes it clear they’re not real users.
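The first pattern, too many page views in a short time, can also be checked directly in your server’s access log. Here is a minimal sketch, assuming the log uses the Common or Combined Log Format (the default on many Apache and Nginx setups). The function name `flag_high_rate_ips` and the threshold of 60 requests per minute are assumptions chosen for illustration, not recommended values.

```python
import re
from collections import Counter

# Matches the start of a Common/Combined Log Format line:
# client IP, two fields we ignore, then the [timestamp].
LOG_LINE = re.compile(r'^(\S+) \S+ \S+ \[([^\]]+)\]')


def flag_high_rate_ips(lines, max_per_minute=60):
    """Return the IPs that exceed max_per_minute requests in any one minute."""
    hits = Counter()
    for line in lines:
        match = LOG_LINE.match(line)
        if not match:
            continue  # skip lines that are not in the expected format
        ip, timestamp = match.groups()
        # "06/Nov/2024:10:15:32 +0000" -> "06/Nov/2024:10:15" (drop seconds)
        minute = timestamp[:17]
        hits[(ip, minute)] += 1
    return sorted({ip for (ip, _), count in hits.items() if count > max_per_minute})
```

You could feed it the lines of your access log, for example `flag_high_rate_ips(open("access.log"))`, where the file name is a placeholder for your own log path.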

How to detect bot traffic on your website?

You won’t have thousands of real people in one location repeatedly clicking through your website with a high bounce rate or trying to access your data. Traffic like that is most likely bots trying to get in.

That, ultimately, is what studying their behavior tells us.

But how can you investigate these patterns or block them from coming back?

For that, you need proper analytics and tools.

Let’s take a closer look at the tools we can use!

1. Google Analytics

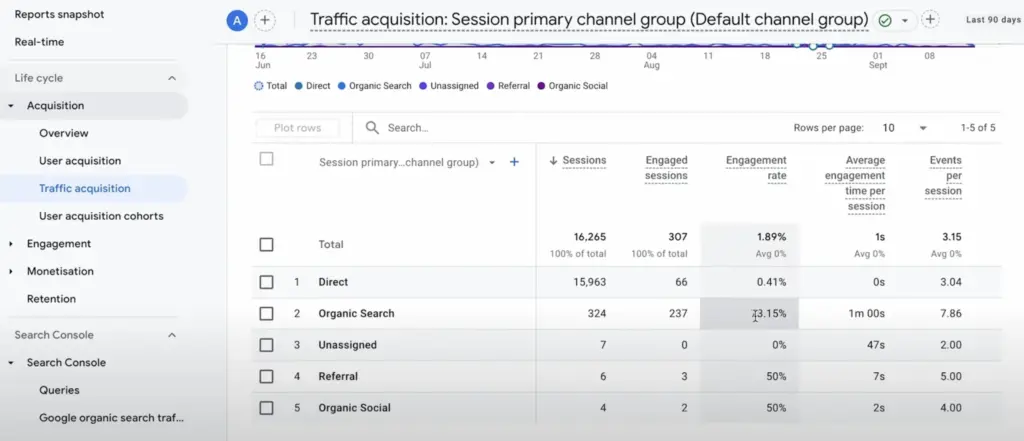

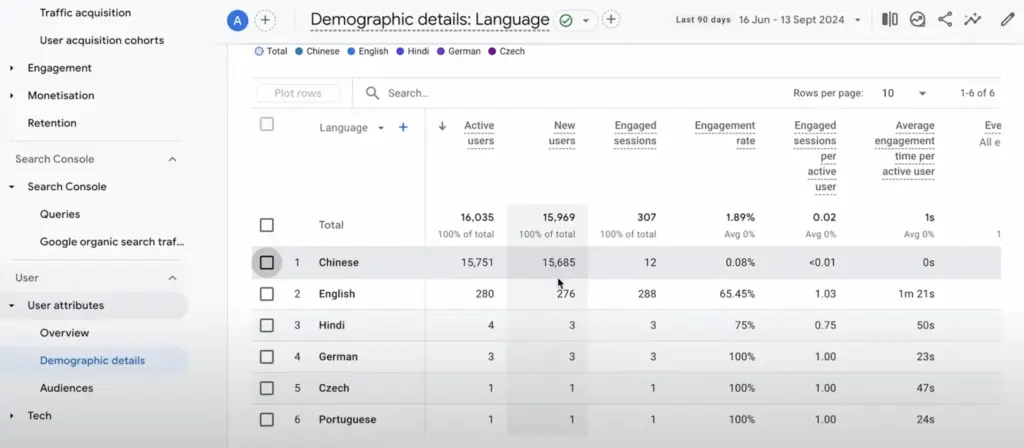

When it comes to tracking audiences or analyzing metrics, Google Analytics is a popular choice among webmasters. With it, you can easily check metrics like engaged sessions, bounce rate, and the traffic channels of your audience.

To get started, simply register your website on Google Analytics and head to the user acquisition report to spot any unusual traffic patterns.

If you notice a large amount of traffic with “zero” engagement or strange session durations, it could very well be bot traffic coming to your site.

Another helpful clue is the language data. If you see a high volume of traffic with languages that don’t typically appear on your site, it’s a strong indication of bot activity.

Moreover, Google Analytics allows you to create segments to filter out suspicious traffic based on behavior patterns.

But if you’re not into Google Analytics, don’t worry! There are other options for you to investigate bot traffic too.

2. Cloudflare

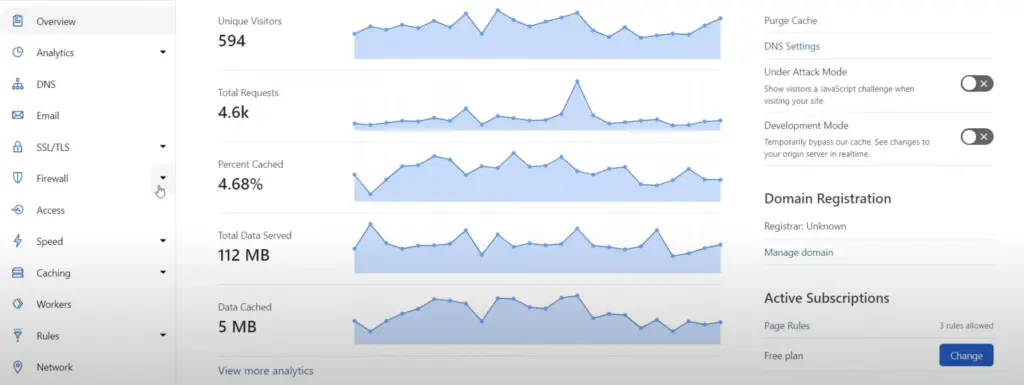

If you already use Cloudflare, you can track incoming traffic and tell bots from humans more easily.

Its dashboard offers dedicated sections for bot and DDoS detection.

To use them, connect your WordPress site to Cloudflare and enable Bot Fight Mode from the Bots section.

But that’s not all!

Cloudflare takes spam traffic seriously. It also offers a JavaScript challenge that visitors must pass before they can reach your website.

This means that if you enable Under Attack Mode, Cloudflare shows a JavaScript challenge to everyone visiting your site and treats the visitors that fail it as bots.

That’s how bot traffic can be tracked easily. But what if the results show that you do have bot traffic on your website?

Surely, you’ll want to block it before anything bad happens.

For that, you need to know the right tools and plugins, so let’s get to them without further ado!

How to block bot traffic from websites?

There are many tools and plugins on the market that promise complete protection from bot traffic. But not all of them are convincing.

That’s why we made this list of security tools to guide you.

1. Wordfence Security Plugin

We’re opening with Wordfence because it’s one of the most widely used WordPress security plugins on the market. And deservedly so, because it’s hard to find this level of malware protection on a modest budget.

Besides detection, Wordfence also lets you block malicious bots by IP, user agent, or country from the “Live Traffic” logs.

For basic setup, start by navigating to the “Firewall” settings in Wordfence and configure rules to block known bots.

2. IP Blacklisting via .htaccess

This is not a plugin but a configuration file in your site’s root directory that controls access rules for visitors on Apache-based servers. If you already know the IP addresses the bot traffic comes from, you can block them manually in .htaccess.

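To start the process, access your site’s .htaccess file through your hosting file manager or FTP. Then add a code snippet to block specific IPs, for example:

    # Block one IP address (203.0.113.10 is a placeholder; use the real one)
    <Limit GET POST>
        Order Allow,Deny
        Allow from all
        Deny from 203.0.113.10
    </Limit>

Note that Apache does not allow a comment on the same line as a directive, so keep comments on their own lines. On Apache 2.4 and later, these Order, Allow, and Deny directives are deprecated in favor of the Require syntax, though many hosts still accept them.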

Important note: This method requires caution, as mistakes can impact your site’s availability.
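Since a single typo in an IP address can lead to a broken or ineffective rule, it can help to validate your list before pasting it into .htaccess. The sketch below uses Python’s standard `ipaddress` module; the function name `build_deny_rules` is hypothetical, and it only generates the `Deny from` lines for you to review. It does not edit any file.

```python
import ipaddress


def build_deny_rules(candidates):
    """Split candidates into 'Deny from' lines and rejected entries."""
    rules = []
    rejected = []
    for raw in candidates:
        text = raw.strip()
        try:
            # Raises ValueError for anything that is not a valid IPv4/IPv6 address.
            address = ipaddress.ip_address(text)
        except ValueError:
            rejected.append(text)
            continue
        rules.append(f"Deny from {address}")
    return rules, rejected
```

A malformed entry such as `999.1.1.1` ends up in the rejected list instead of in your configuration, so you can correct it before it ever reaches the server.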

3. reCAPTCHA (via WordPress plugin)

reCAPTCHA is a well-known protection from Google against spam and fraudulent activity. It is mainly used on login and form pages.

So, for WordPress, you need to install the Google Captcha plugin and enable it on login, registration, or comment forms. This will add a verification check that most bots can’t bypass.

But installing an additional plugin just to use reCAPTCHA may feel like overkill.

In this case, Fluent Forms can help WordPress users. This plugin offers two security options, Honeypot and reCAPTCHA, to detect bots and block them from getting in.

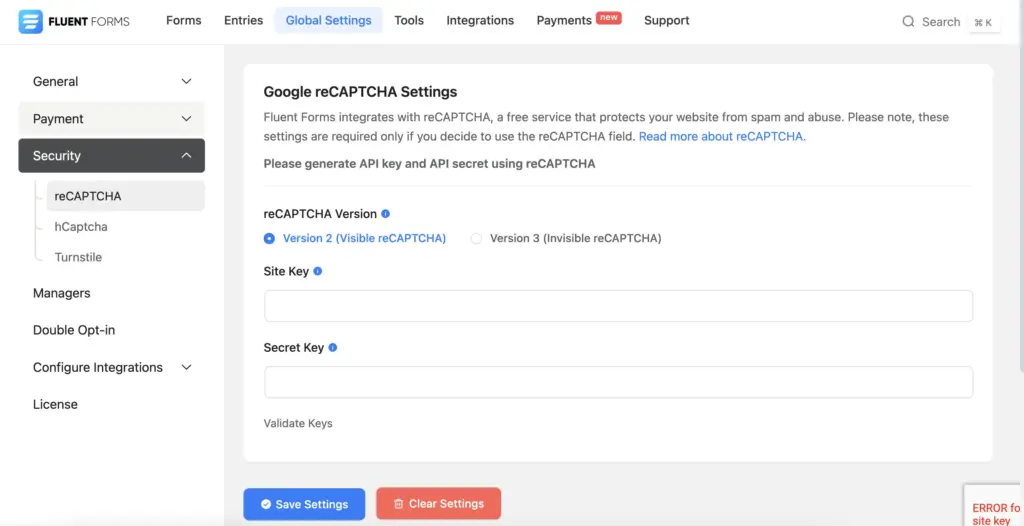

If you already have this plugin, a couple of simple steps are all it takes. To enable reCAPTCHA or hCaptcha, go to Global Settings from the dashboard and check the Security option.

Both reCAPTCHA and hCaptcha can be enabled from here. You need to provide the site key and the secret key to activate them.

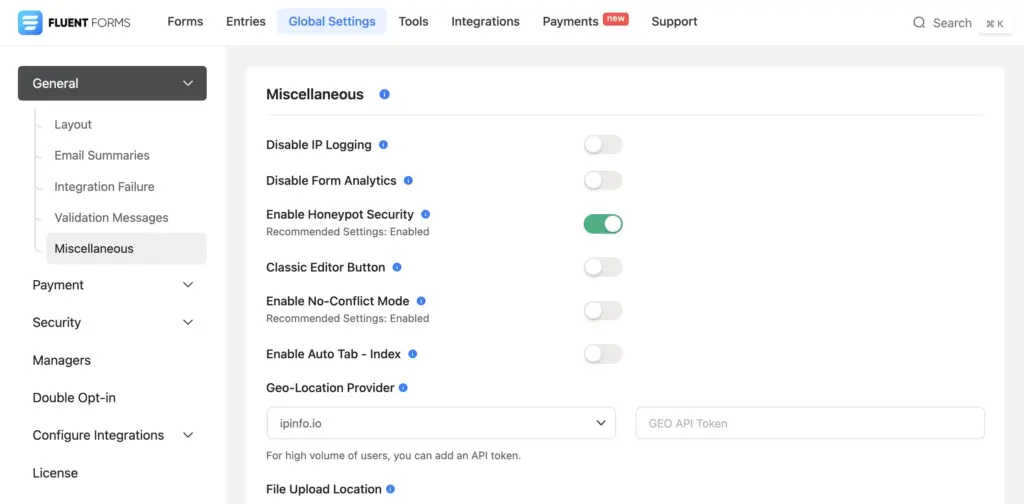

After enabling the reCAPTCHA protection, you can go ahead with the Honeypot security.

Honeypot is also part of the Fluent Forms Global Settings. From the dashboard, click Miscellaneous and then enable Honeypot Security.
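If you’re wondering why a honeypot catches bots, here is a minimal sketch of the general idea. It is not how Fluent Forms implements it internally; the field name `website_url` and the function `is_honeypot_triggered` are hypothetical. The form contains an extra field that is hidden from human visitors, so a submission that fills it in was most likely made by a script.

```python
# Name of the hidden form field (hypothetical, chosen for illustration).
HONEYPOT_FIELD = "website_url"


def is_honeypot_triggered(form_data):
    """Return True when the hidden honeypot field contains any text."""
    value = form_data.get(HONEYPOT_FIELD, "")
    # Humans never see the field, so it should arrive empty or be missing.
    return bool(value.strip())
```

Real visitors never notice the extra field, which is why this check adds no friction compared with a CAPTCHA.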

4. Robots.txt disallow

Lastly, you can deny bots access through the robots.txt file. This text file lets you allow, disallow, or set a crawl delay for web bots.

To do that, simply add the name of each bot followed by the appropriate robots.txt directives.

    User-agent: BadBot1
    Disallow: /page1

    User-agent: BadBot2
    Disallow: /page2
    Disallow: /page3
    Disallow: /page4

These directives tell the named bots not to crawl the paths listed for them in robots.txt.

However, bad bots ignore this file most of the time, so treat robots.txt as a way to guide well-behaved crawlers rather than as a security measure. To apply it to good bots, you can study our full guide:

How to Use the Robots.txt File on Your Website with a Step-by-Step Guide
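Before you publish your rules, you can check how a compliant crawler would read them. The sketch below uses `urllib.robotparser` from Python’s standard library. The bot names and paths are the placeholder values from the example in this section, and the helper `is_allowed` is a hypothetical name.

```python
from urllib.robotparser import RobotFileParser

# Placeholder rules, with each bot's paths grouped under one User-agent line.
ROBOTS_TXT = """\
User-agent: BadBot1
Disallow: /page1

User-agent: BadBot2
Disallow: /page2
Disallow: /page3
"""


def is_allowed(robots_txt, user_agent, path):
    """Return True if a compliant crawler with this name may fetch the path."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, path)
```

For example, `is_allowed(ROBOTS_TXT, "BadBot2", "/page3")` reports whether BadBot2 may fetch `/page3` under these rules. Remember that this only tells you what a bot that respects robots.txt would do.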

Final thoughts

Website security is something we always need to prioritize. From selecting a monitoring tool to setting up strong firewall protection, every step counts.

Plenty of people out there are waiting for a single chance to steal your data and disrupt your online journey. But that doesn’t mean the web is defenseless against bot traffic. There’s a solution for everything.

Now that you’ve learned these techniques, you’re better equipped to keep spammers at bay. Stay vigilant and choose the right tools to protect your website.
